Amazon A/B Testing: A First Look

In this article we provide an overview of Amazon’s new A/B testing tool, including a guide to setting up your own experiments and our team’s findings and takeaways after using the feature ourselves.


We don’t recommend trying to launch a product without knowing the market and target audience. To quote the cliché, that approach is like throwing spaghetti at a wall to see what sticks. Important product and e-commerce decisions should be based on concrete data, or else there’s a risk that months or years of hard work could, so to speak, slide down the wall.

Unfortunately, Amazon can make it difficult to access the kind of data needed to make important e-commerce decisions. The platform’s relative lack of transparency means sellers do not always have the ability to run the tests or record the data they need.

Amazon recently introduced a new tool to facilitate this kind of data collection. At first, it was only available to vendors and sellers in the United States; now, A/B testing is also possible on the European marketplaces. Read on to learn how Amazon’s new A/B testing tool works and what our US team concluded after trying it out for themselves.

What is A/B Testing?

A/B testing, also known as split testing, is an experimental method frequently used in product development and marketing. In an A/B experiment, two versions of the same element are shown to users during the same time period, with each user seeing only one of them. One version acts as the “control”, while the other tests a new page or product element.

After the test is over, user behavior metrics (such as the conversion rate or units sold) indicate which version has more impact on consumers, ultimately revealing a “winner”. These tests can inform decisions about which content creation approaches should be used for a particular product line or target audience.
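To make the idea of a “winner” concrete, here is a minimal Python sketch that computes the conversion rate and units per unique visitor for each version and the relative lift of B over A. The `VersionStats` class, its field names, the `naive_winner` helper and the numbers in the usage example are all hypothetical. Picking the higher rate alone ignores random noise, which is why real testing tools attach a probability or significance level to the result.

```python
from dataclasses import dataclass


@dataclass
class VersionStats:
    """Aggregated results for one content version (hypothetical field names)."""
    name: str
    unique_visitors: int
    orders: int
    units: int

    @property
    def conversion_rate(self) -> float:
        # Share of unique visitors who placed an order.
        return self.orders / self.unique_visitors if self.unique_visitors else 0.0

    @property
    def units_per_visitor(self) -> float:
        # Units sold per unique visitor.
        return self.units / self.unique_visitors if self.unique_visitors else 0.0


def relative_lift(control: VersionStats, variant: VersionStats) -> float:
    """Relative change of the variant's conversion rate versus the control."""
    if control.conversion_rate == 0:
        raise ValueError("control conversion rate is zero; lift is undefined")
    return variant.conversion_rate / control.conversion_rate - 1.0


def naive_winner(control: VersionStats, variant: VersionStats) -> str:
    # Picks the higher conversion rate and ignores random noise entirely.
    if variant.conversion_rate > control.conversion_rate:
        return variant.name
    if variant.conversion_rate < control.conversion_rate:
        return control.name
    return "tie"


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    a = VersionStats("A (control)", unique_visitors=4000, orders=320, units=352)
    b = VersionStats("B (variant)", unique_visitors=4000, orders=360, units=389)
    print(f"A: {a.conversion_rate:.2%}  B: {b.conversion_rate:.2%}")
    print(f"lift: {relative_lift(a, b):+.1%}  winner: {naive_winner(a, b)}")
```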

A/B testing provides valuable insights when introducing new A+ content, for example: the effectiveness of a new content style can be tested, while the older content serves as the control version.

Why is it exciting that Amazon is offering this feature?

A/B testing is a powerful tool because it enables optimization based on real customer behavior and improves understanding of specific target segments. Testing individual elements in isolation makes it possible to maximize effectiveness through an accumulation of small changes, without the risk of a complete content overhaul. It’s a cost-effective and easy way to collect concrete, relevant data.

However, although A/B experiments provide valuable insights into content effectiveness, running them on Amazon was previously only possible with the help of third-party companies. Without buying an extra tool or working with another company, sellers could still try out different content approaches and observe how they affected user behavior.

The restrictions inherent to the Amazon platform meant that it was necessary to either

- apply the experimental content element to two products or

- apply it to the same product during a different time period.

Since both versions couldn’t be shown for the same product at the same time, it was impossible to know whether the content element itself was driving the metrics or whether other influences were at play, such as differences between the two products, seasonality or trends.

When does it make sense to implement A/B testing?

A/B testing on Amazon is a particularly powerful tool when launching new products or expanding into new target audiences. Sellers can run experiments on a small batch of products to gather initial insights into how customers, or the Amazon algorithm, respond to certain elements, and then apply those insights when launching additional products. In general, whenever a change to the product detail page is being considered, it makes sense to test it before rolling it out across a wide range of products.

Who has access to Amazon’s A/B testing?

Currently, the feature is in beta and available for free. It is only available to sellers and vendors who own a brand and have at least one ASIN with sufficient traffic. To be eligible for testing, an ASIN must receive several dozen orders a week or more, depending on the category. This threshold ensures that products get enough traffic and sales for the experiment to produce meaningful results.


Guide: How to Set Up A/B Testing

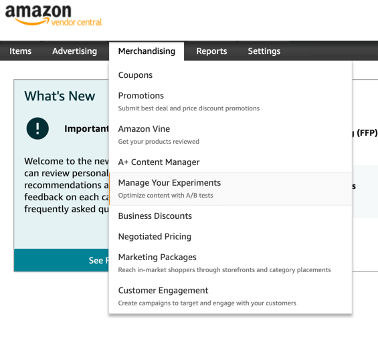

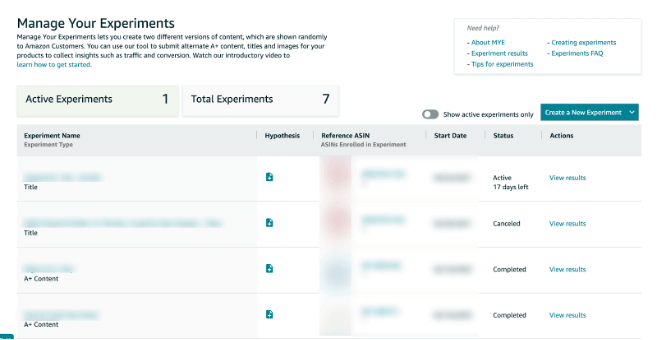

To set up an A/B test, navigate to the “Manage Your Experiments” page via the merchandising tab in Vendor or Seller Central. From that page, select the “Create a new experiment” button and choose one of the elements that can currently be tested: the product title or the main image. In the United States, A+ content can also be tested. Next, select the desired products from the list of eligible ASINs. Only one experiment can run on a given ASIN at a time, so if an experiment is already in progress, that ASIN will not be available for a new experiment until the running test has concluded.

Next, the experiment can be named and, if relevant, given a hypothesis describing what the seller expects to happen. An experiment duration of 4 to 10 weeks can also be selected; 8 to 10 weeks is recommended for more accurate results.
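Why do longer runs and higher-traffic ASINs give more reliable results? A common frequentist rule of thumb estimates how many visitors each version needs before a given lift can be detected. The sketch below is an illustration under stated assumptions, not Amazon’s method (the tool uses a Bayesian analysis and does not expose such a calculation): it assumes an even 50/50 traffic split, a two-sided test at a 5% significance level with 80% power, and the function names and traffic figures are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_version(baseline_rate: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors each version needs to detect `relative_lift` (two-proportion test).

    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be in (0, 1) and the lift must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def weeks_needed(weekly_visitors: int, baseline_rate: float,
                 relative_lift: float) -> int:
    """Whole weeks until both versions reach the required sample size."""
    per_version_per_week = weekly_visitors / 2  # assumes a 50/50 traffic split
    return math.ceil(visitors_per_version(baseline_rate, relative_lift)
                     / per_version_per_week)


if __name__ == "__main__":
    # Hypothetical ASIN: 5,000 logged-in visitors per week, 10% conversion rate.
    for lift in (0.20, 0.10, 0.05):
        print(f"{lift:.0%} lift -> about {weeks_needed(5000, 0.10, lift)} weeks")
```

With these made-up numbers, a 5% lift would need far more than the 10-week maximum, which is consistent with the article’s point that only ASINs with sufficient traffic are eligible.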

When choosing the content versions to compare, the content currently live on the product detail page (whether A+ content, the title or the main image) becomes “Version A”, the control in the experiment. Users can then add a second “Version B” for comparison. If A+ content is being tested, the B version must already be approved and applied to the ASIN in Vendor Central; once this has been done, it will appear in the drop-down menu.

While the experiment is running, data is only recorded for customers who are logged into their accounts, so page views that are not tied to a customer account are not included in the sample size. Also, avoid ending an experiment early just because one version seems to be the clear favorite: results from the first weeks are not yet representative and can be misleading.
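The risk of stopping early can be illustrated with a small simulation of A/A tests, in which both versions are truly identical, so any declared “winner” is a false positive. For simplicity, this sketch swaps in a classic two-proportion z-test rather than Amazon’s Bayesian analysis, and all traffic figures are hypothetical. It compares checking the results every week and stopping at the first “significant” difference against evaluating the test only once, at its planned end.

```python
import math
import random


def z_significant(conv_a: int, n_a: int, conv_b: int, n_b: int,
                  z_crit: float = 1.96) -> bool:
    """Two-sided two-proportion z-test with pooled variance (~5% level)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return False
    return abs(conv_b / n_b - conv_a / n_a) / se > z_crit


def simulate_aa_tests(n_tests: int = 300, weeks: int = 8,
                      visitors_per_week: int = 200, rate: float = 0.10,
                      seed: int = 42) -> tuple[float, float]:
    """Return the share of A/A tests that wrongly declare a winner.

    First value: results checked every week, stopping at the first
    'significant' result. Second value: evaluated only at the planned end.
    """
    rng = random.Random(seed)
    peek_false_wins = final_false_wins = 0
    for _ in range(n_tests):
        conv_a = conv_b = n = 0
        significant_at_some_week = False
        for _ in range(weeks):
            n += visitors_per_week
            # Both versions convert at the same true rate.
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_week))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_week))
            if z_significant(conv_a, n, conv_b, n):
                significant_at_some_week = True  # a "peeker" would stop here
        peek_false_wins += significant_at_some_week
        final_false_wins += z_significant(conv_a, n, conv_b, n)
    return peek_false_wins / n_tests, final_false_wins / n_tests


if __name__ == "__main__":
    peek, final = simulate_aa_tests()
    print(f"false winners with weekly peeking: {peek:.1%}, final look only: {final:.1%}")
```

Because the final week is one of the weekly looks, the peeking rule can never produce fewer false winners than the single final evaluation; in practice it typically produces noticeably more.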

Understanding the Experiment Results

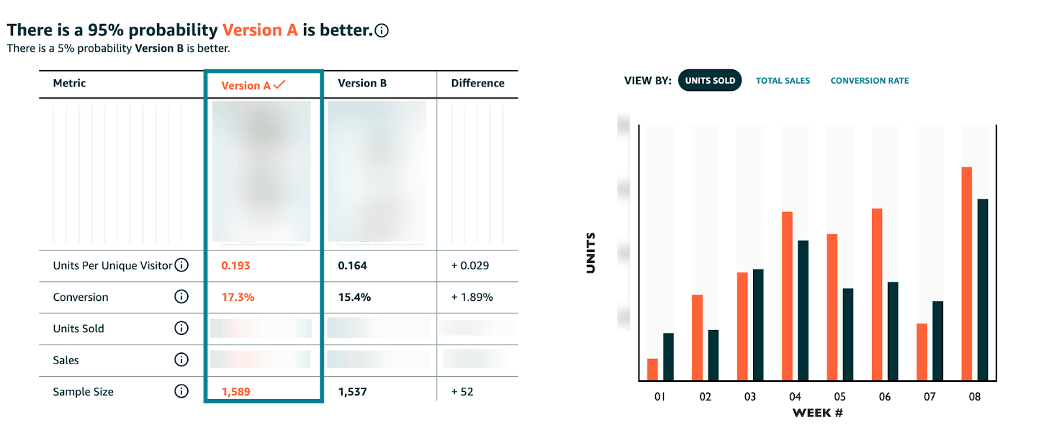

Throughout the experiment, results are updated weekly. The data are evaluated with a Bayesian analysis: rather than relying on the test data alone, the results combine the collected data with a statistical probability model and are expressed as the probability that one version performs better than the other. If a significant difference between the content versions is found, users receive the following results at the end of the experiment:

- The probability of one content version being more effective than the other

- Metrics such as units, sales, conversion rate, units sold per unique visitor, and the sample size for each version

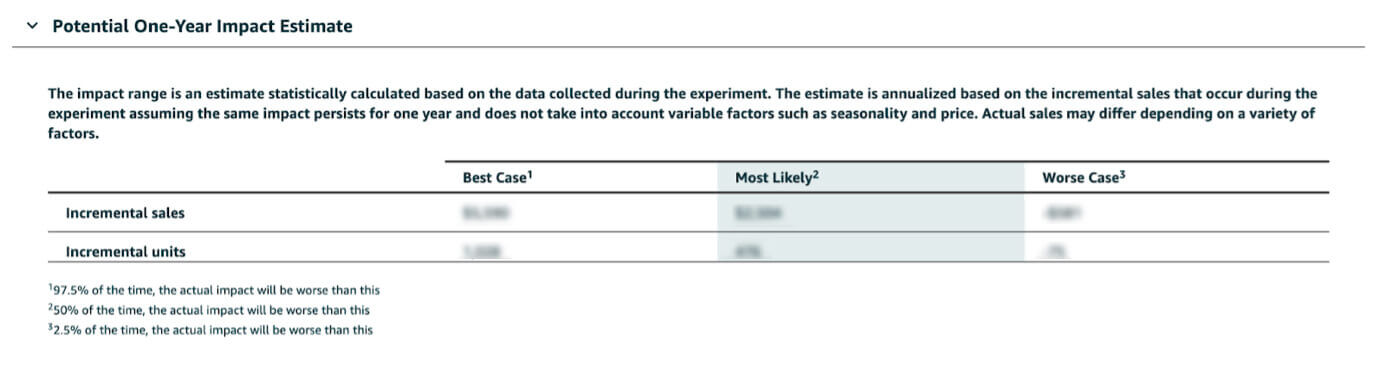

- The projected one-year impact of the winning content, an estimate of the possible incremental units and sales
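Amazon does not publish the exact model behind these figures, so the following is only a minimal sketch of the general idea under assumptions: each version’s conversion rate gets a Beta-Binomial model with a uniform Beta(1, 1) prior, the probability that B beats A is estimated by Monte Carlo sampling, and the one-year impact is a naive extrapolation that keeps weekly traffic and the measured difference constant for 52 weeks. Function names, parameters and numbers are hypothetical.

```python
import random


def prob_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   draws: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(rate_B > rate_A) with uniform Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each conversion rate: Beta(1 + conversions, 1 + misses).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


def naive_one_year_impact(weekly_visitors: float, units_per_visitor_a: float,
                          units_per_visitor_b: float,
                          avg_price: float) -> tuple[float, float]:
    """Extra units and sales over 52 weeks if version B replaced version A.

    Deliberate simplification: traffic and the measured difference are
    assumed to stay constant for the whole year.
    """
    extra_units = weekly_visitors * (units_per_visitor_b - units_per_visitor_a) * 52
    return extra_units, extra_units * avg_price


if __name__ == "__main__":
    # Made-up test data: 4,000 visitors per version.
    p = prob_b_beats_a(conv_a=320, n_a=4000, conv_b=360, n_b=4000)
    units, sales = naive_one_year_impact(2000, 352 / 4000, 389 / 4000, 25.0)
    print(f"P(B > A) ~ {p:.1%}; projected +{units:.0f} units, +{sales:,.0f} in sales")
```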

If results are inconclusive or have low confidence, it can mean that the tested element doesn’t noticeably affect customer behavior and isn’t worth investigating further, that the two versions are similarly effective, or that the sample was too small to detect a small difference.
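One way to tell these cases apart is to look at an interval estimate for the difference in conversion rates, not just at a single probability. The sketch below swaps in a frequentist normal-approximation confidence interval instead of Amazon’s Bayesian analysis, and the `margin` (the smallest difference that would matter to the business) is a hypothetical choice. If the whole interval lies within that margin, the versions are practically equivalent; if it is wide and straddles zero, the test is simply inconclusive.

```python
import math
from statistics import NormalDist


def classify_result(conv_a: int, n_a: int, conv_b: int, n_b: int,
                    margin: float = 0.005, level: float = 0.95) -> str:
    """Label a finished test using a confidence interval for rate_B - rate_A.

    margin: smallest absolute difference in conversion rate that would matter
    to the business (0.005 = 0.5 percentage points); this is a choice you make.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both versions need at least one visitor")
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    diff = p_b - p_a
    low, high = diff - z * se, diff + z * se
    if low > 0:
        return "B better"
    if high < 0:
        return "A better"
    if -margin < low and high < margin:
        return "practically equivalent"  # any real difference is too small to matter
    return "inconclusive"  # interval too wide: effect unclear, more data needed
```

For example, `classify_result(10, 100, 10, 100)` is inconclusive, while the same 10% rates measured on 100,000 visitors per version come out as practically equivalent.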

Our Team’s Takeaways and Outlook

In our first experiments, our team found that Amazon’s A/B testing feature, although it has a lot of potential, still has some glitches and does not yet work completely smoothly.

For example, despite only minor differences between the metrics of the two versions, one test result showed a very high probability that one version was superior. In a few of the tests, there were weeks in which no data appeared to have been collected for one of the content versions, which led us to doubt the reliability of the results. We also often had to wait one to two weeks before new data became available, even though the results are meant to update weekly.

These are signs that Amazon A/B testing still needs time before it becomes a completely reliable tool. Nevertheless, several of our tests showed strong and seemingly reliable results, and we gained valuable new insights from the feature. Because the metrics for each version are directly visible, they can be compared against the verdict provided by the tool before a final conclusion is drawn.
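That comparison can be turned into a simple checklist. The sketch below flags the two issues our team ran into: weeks in which no data was recorded for one version, and a very high probability paired with only a tiny lift. Everything here is hypothetical: the `WeeklyCounts` structure assumes the weekly figures are copied from the dashboard by hand, and the thresholds are illustrative, not Amazon defaults. A high probability with a small lift can be legitimate when sample sizes are very large, so a flag is a prompt to look closer, not proof of an error.

```python
from dataclasses import dataclass


@dataclass
class WeeklyCounts:
    """Visitors recorded per version in one week (copied from the dashboard)."""
    week: int
    visitors_a: int
    visitors_b: int


def review_flags(weeks: list[WeeklyCounts], prob_b_better: float,
                 rate_a: float, rate_b: float,
                 high_prob: float = 0.95, min_rel_lift: float = 0.02) -> list[str]:
    """Return warnings worth checking before trusting the tool's verdict."""
    flags = []
    for w in weeks:
        # A week with zero visitors for either version suggests missing data.
        if w.visitors_a == 0 or w.visitors_b == 0:
            flags.append(f"week {w.week}: no data recorded for one version")
    if rate_a > 0:
        rel_lift = abs(rate_b / rate_a - 1)
        # "Confident" in either direction: B very likely better or very likely worse.
        confident = prob_b_better >= high_prob or prob_b_better <= 1 - high_prob
        if confident and rel_lift < min_rel_lift:
            flags.append(
                f"probability {prob_b_better:.0%} looks high for a {rel_lift:.1%} lift; "
                "check sample sizes and weekly data")
    return flags
```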

Our verdict: Amazon’s A/B testing feature is definitely worth a try, but especially in the early months, it is wise to view the results critically.


Remazing GmbH

Brandstwiete 1

20457 Hamburg

©Remazing GmbH